Not every website exposes their data through a JSON API: in many cases the HTML page shown to users is all you get. This tutorial will walk you through using Scala to scrape useful information from human-readable HTML pages, unlocking the ability to programmatically extract data from online websites or services that were never designed for programmatic access via an API.

About the Author: Haoyi is a software engineer, and the author of many open-source Scala tools such as the Ammonite REPL and the Mill Build Tool. If you enjoyed the contents on this blog, you may also enjoy Haoyi's book Hands-on Scala Programming

To work with these human-readable HTML webpages, we will be using the Ammonite Scala REPL along with the Jsoup HTML query library. To begin with, I will install Ammonite:

$ sudo sh -c '(echo "#!/usr/bin/env sh" && curl -L https://github.com/lihaoyi/Ammonite/releases/download/1.6.8/2.13-1.6.8) > /usr/local/bin/amm && chmod +x /usr/local/bin/amm'

And then use import $ivy to download the latest version of Jsoup:

$ amm

Loading...

Welcome to the Ammonite Repl 1.6.8

(Scala 2.13.0 Java 11.0.2)

If you like Ammonite, please support our development at www.patreon.com/lihaoyi

@ import $ivy.`org.jsoup:jsoup:1.12.1`, org.jsoup._

https://repo1.maven.org/maven2/org/jsoup/jsoup/1.12.1/jsoup-1.12.1.pom

100.0% [##########] 8.1 KiB (4.1 KiB / s)

https://repo1.maven.org/maven2/org/jsoup/jsoup/1.12.1/jsoup-1.12.1-sources.jar

100.0% [##########] 182.3 KiB (55.6 KiB / s)

https://repo1.maven.org/maven2/org/jsoup/jsoup/1.12.1/jsoup-1.12.1.jar

100.0% [##########] 387.8 KiB (67.1 KiB / s)

import $ivy.$

Next, we can follow the first example in the Jsoup documentation and call org.jsoup.Jsoup.connect in order to download a simple web page to get started:

@ val doc = Jsoup.connect("http://en.wikipedia.org/").get()

doc: nodes.Document = <!doctype html>

<html class="client-nojs" lang="en" dir="ltr">

<head>

<meta charset="UTF-8">

<title>Wikipedia, the free encyclopedia</title>

...

@ doc.title()

res4: String = "Wikipedia, the free encyclopedia"

@ val headlines = doc.select("#mp-itn b a")

headlines: select.Elements = <a href="/wiki/2019_Kashmir_earthquake" title="2019 Kashmir earthquake">An earthquake</a>

<a href="/wiki/R_(Miller)_v_The_Prime_Minister_and_Cherry_v_Advocate_General_for_Scotland" title="R (Miller) v The Prime Minister and Cherry v Advocate General for Scotland">unanimously rules</a>

<a href="/wiki/2019_British_prorogation_controversy" title="2019 British prorogation controversy">September 2019 prorogation of Parliament</a>

<a href="/wiki/2I/Borisov" title="2I/Borisov">2I/Borisov</a>

...

@ import collection.JavaConverters._

import collection.JavaConverters._

@ for(headline <- headlines.asScala) yield (headline.attr("title"), headline.attr("href"))

res9: collection.mutable.Buffer[(String, String)] = ArrayBuffer(

("2019 Kashmir earthquake", "/wiki/2019_Kashmir_earthquake"),

("2019 British prorogation controversy", "/wiki/2019_British_prorogation_controversy"),

("2I/Borisov", "/wiki/2I/Borisov"),

("71st Primetime Emmy Awards", "/wiki/71st_Primetime_Emmy_Awards"),

...

@ for(headline <- headlines.asScala) yield headline.text

res10: collection.mutable.Buffer[String] = ArrayBuffer(

"An earthquake",

"unanimously rules",

"September 2019 prorogation of Parliament",

"2I/Borisov",

...

This snippet front page of Wikipedia as a HTML document, then extracts the links and titles of the "In the News" articles.

Now that we've run through the simple example from the Jsoup website, let's look into more detail what we just did:

@ val doc = Jsoup.connect("http://en.wikipedia.org/").get()

doc: nodes.Document = <!doctype html>

<html class="client-nojs" lang="en" dir="ltr">

<head>

<meta charset="UTF-8">

<title>Wikipedia, the free encyclopedia</title>

...

Most functionality in the Jsoup library lives on org.jsoup.Jsoup. Above we used .connect to ask Jsoup to download a HTML page from a URL and parse it for us, but we can also use .parse to parse a string we have available locally:

@ Jsoup.parse("<html><body><h1>hello</h1><p>world</p></body></html>")

res10: nodes.Document = <html>

<head></head>

<body>

<h1>hello</h1>

<p>world</p>

</body>

</html>

For example, .parse could be useful if we already downloaded the HTML files ahead of time, and just need to do the parsing without any fetching.

While Jsoup provides a myriad of different ways for querying and modifying a document, we will focus on just a few: .select, .text, and .attr

@ import collection.JavaConverters._

import collection.JavaConverters._

@ val headlines = doc.select("#mp-itn b a").asScala

headlines: collection.mutable.Buffer[nodes.Element] = Buffer(

<a href="/wiki/2019_Kashmir_earthquake" title="2019 Kashmir earthquake">An earthquake</a>,

<a href="/wiki/R_(Miller)_v_The_Prime_Minister_and_Cherry_v_Advocate_General_for_Scotland" title="R (Miller) v The Prime Minister and Cherry v Advocate General for Scotland">unanimously rules</a>,

<a href="/wiki/2019_British_prorogation_controversy" title="2019 British prorogation controversy">September 2019 prorogation of Parliament</a>,

...

.select is the main way you can query for data within a HTML document. It takes a CSS Selector string, and uses that to select one or more elements within the document that you may be interested in. The basics of CSS selectors are as follows:

foo selects all elements with that tag name, e.g. <foo />

#foo selects all elements with that ID, e.g. <div id="foo" />

.foo selects all elements with that ID, e.g. <div class="foo" />. It also works with multiple classes, e.g. <div class="foo bar qux " />, as long as one of the classes matches

Selectors combined without spaces selects elements that support all of them, e.g. foo#bar.qux would match an element <foo id="bar" class="qux" />

Selectors combined with spaces finds elements that support the leftmost selector, then any (possibly nested) child elements that supports the next selector, and so forth. e.g. foo #bar .qux would match the innermost div in <foo><div id="bar"><div class="qux" /></div></foo>

If you want to select only direct children, ignoring grandchildren and other elements nested more deeply within the HTML page, you can use the > character to do so, e.g. foo > #bar > .qux

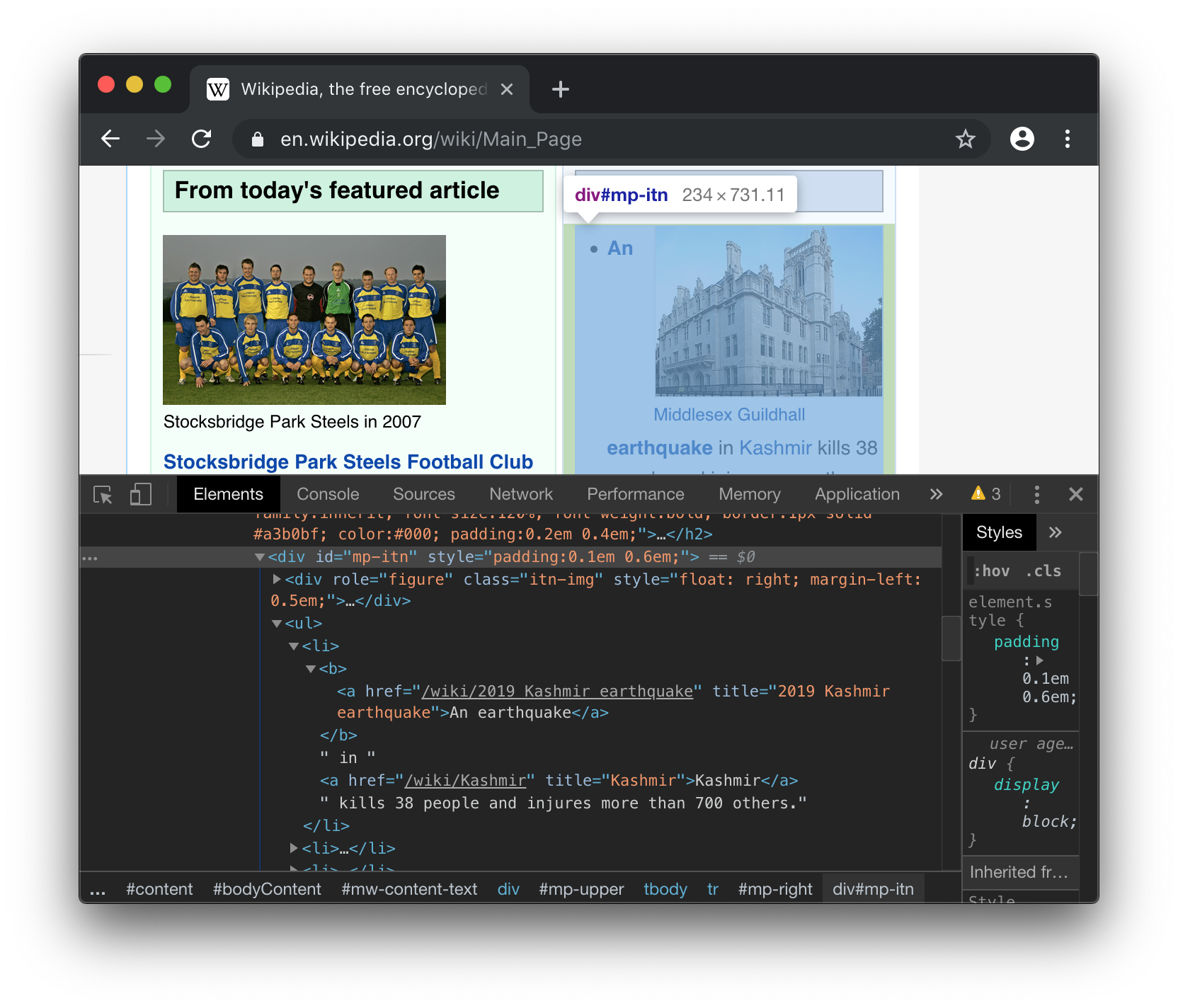

To come up with the selector that would give us the In the News articles, we can go to Wikipedia in the browser and right-click Inspect on the part of the page we care about:

Here, we can see

The enclosing <div> of that section of the page has id="mp-itn", meaning we can select for it using #mp-itn.

Within that div, we have an <ul> unordered list full of <li> list items.

Within each list item is a mix of text and other tags, but we can see that the links to each article are always bolded in a <b> tag, and inside the <b> there is an <a> link tag

Thus, in order to select all those links, we can combine #mp-itn b and a into a single doc.select("#mp-itn b a") call.

Apart from .select, you also have convenience methods like .next, .nextAll, .nextSibling, .nextElementSibling, etc. to help you conveniently find what you want within the HTML document. We will find some of these useful later.

Now that we've gotten the elements that we want, the next step would be to get the data we want off of each element. HTML elements have three main things we care about:

Attributes of the form foo="bar", which Jsoup gives you via .attr("foo")

Text contents, e.g. <foo>hello world</foo>, which Jsoup gives you via .text

Direct child elements, which Jsoup gives you via .children.

Once we have the elements we want, it is more convenient to convert it into a Scala collection to work with:

@ import collection.JavaConverters._

import collection.JavaConverters._

This lets us call .asScala on the resultant element list, so we can easily iterate over it and pick out the parts we want. Whether attributes like the mouse-over title or the link target href:

@ for(headline <- headlines.asScala) yield (headline.attr("title"), headline.attr("href"))

res9: collection.mutable.Buffer[(String, String)] = ArrayBuffer(

("2019 Kashmir earthquake", "/wiki/2019_Kashmir_earthquake"),

("2019 British prorogation controversy", "/wiki/2019_British_prorogation_controversy"),

("2I/Borisov", "/wiki/2I/Borisov"),

("71st Primetime Emmy Awards", "/wiki/71st_Primetime_Emmy_Awards"),

...

Or the text of the link the user will see on screen:

@ for(headline <- headlines.asScala) yield headline.text

res10: collection.mutable.Buffer[String] = ArrayBuffer(

"An earthquake",

"unanimously rules",

"September 2019 prorogation of Parliament",

"2I/Borisov",

...

Thus, we are able to pick out the list of Wikipedia articles - and their titles and URLs - by using Jsoup to scrape the Wikipedia front page.

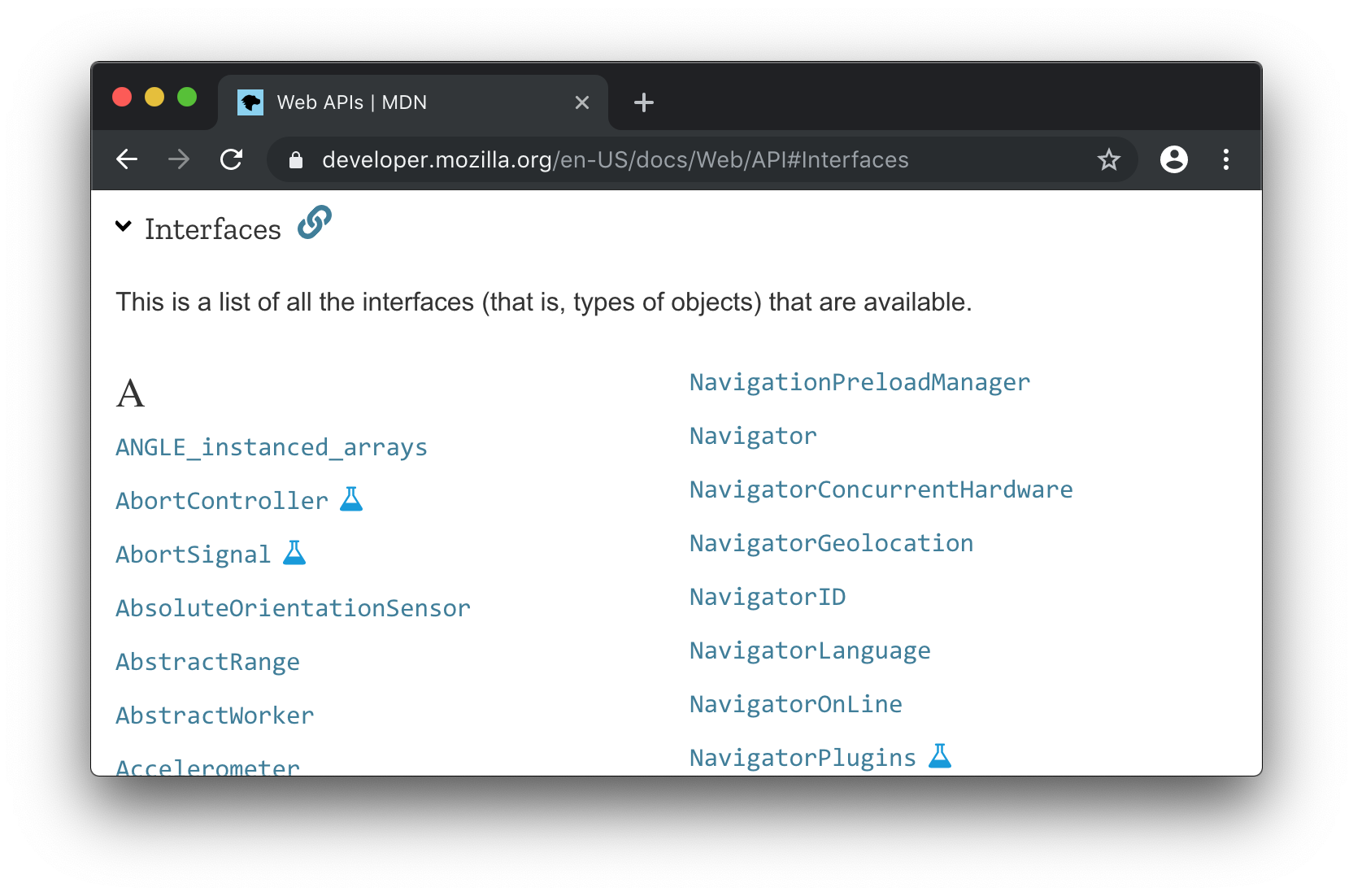

One source of semi-structured data is the Mozilla Development Network web API documentation:

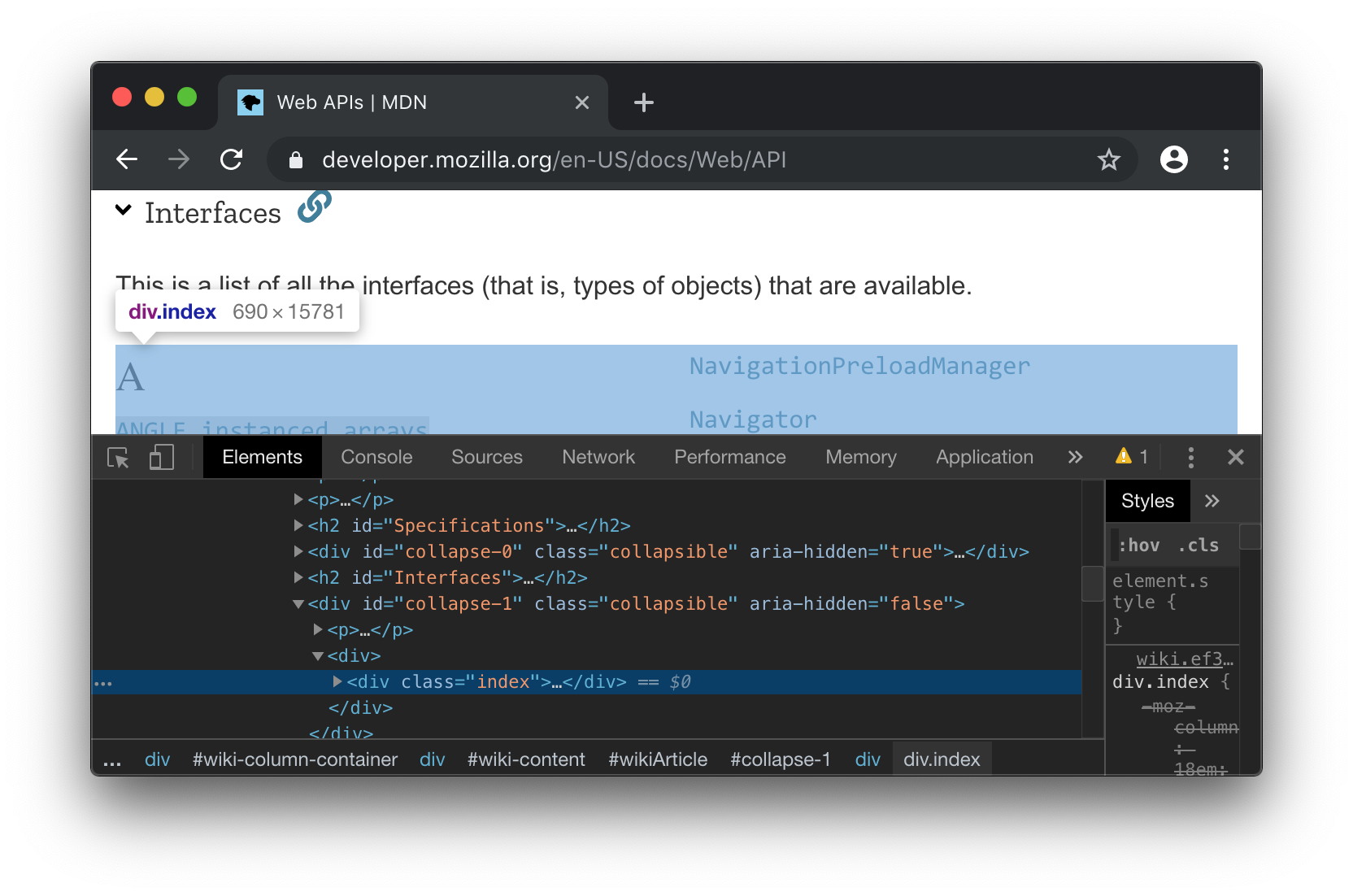

This website contains manually-curated documentation for the plethora of APIs you have available when writing Javascript code to run in the browser, under the Interfaces section, as shown below:

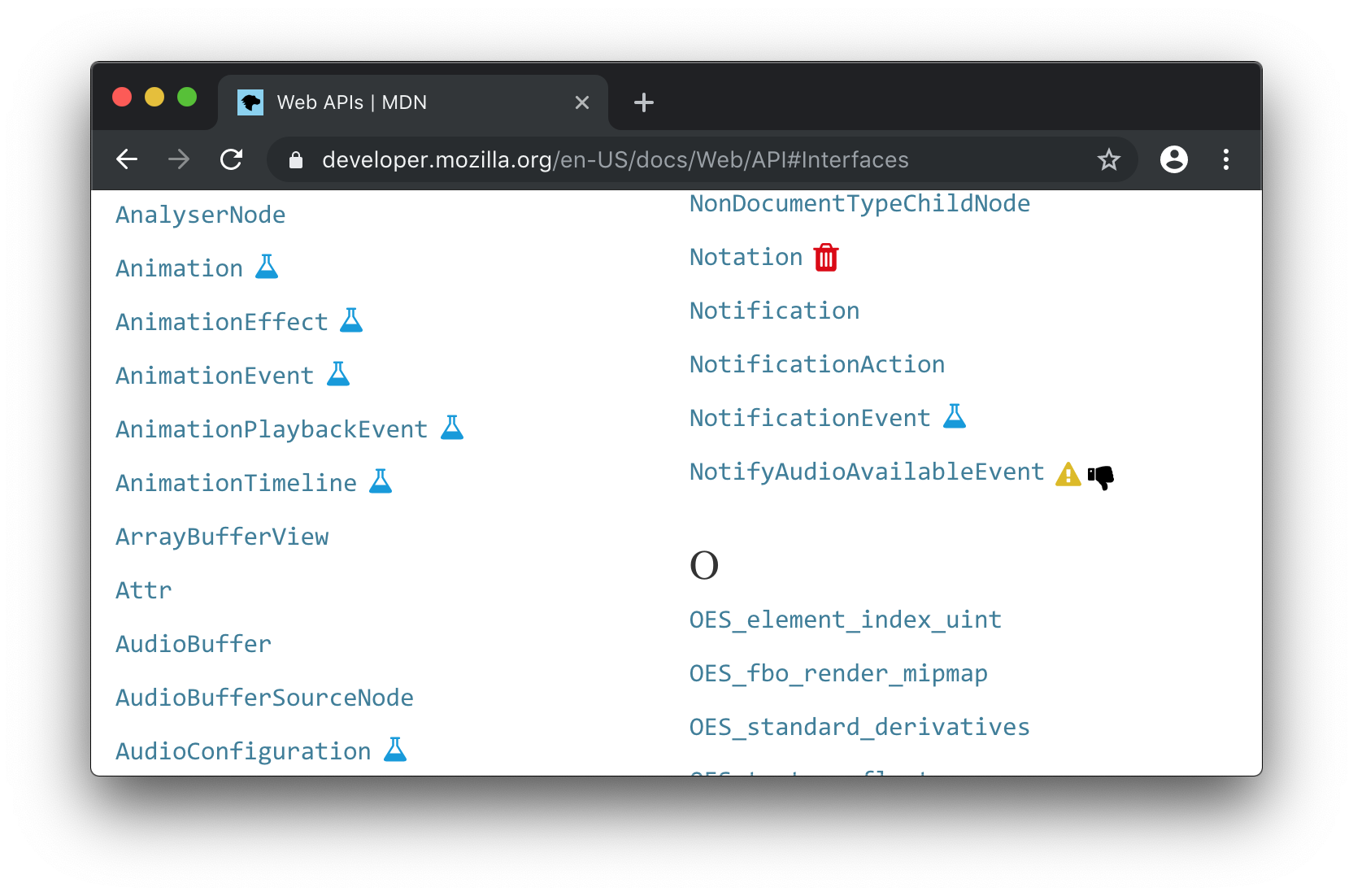

The various APIs are also tagged: with a blue beaker icon to mark experimental APIs, a black thumbsdown to mark deprecated APIs, and a red trashcan to mark those which have already been removed:

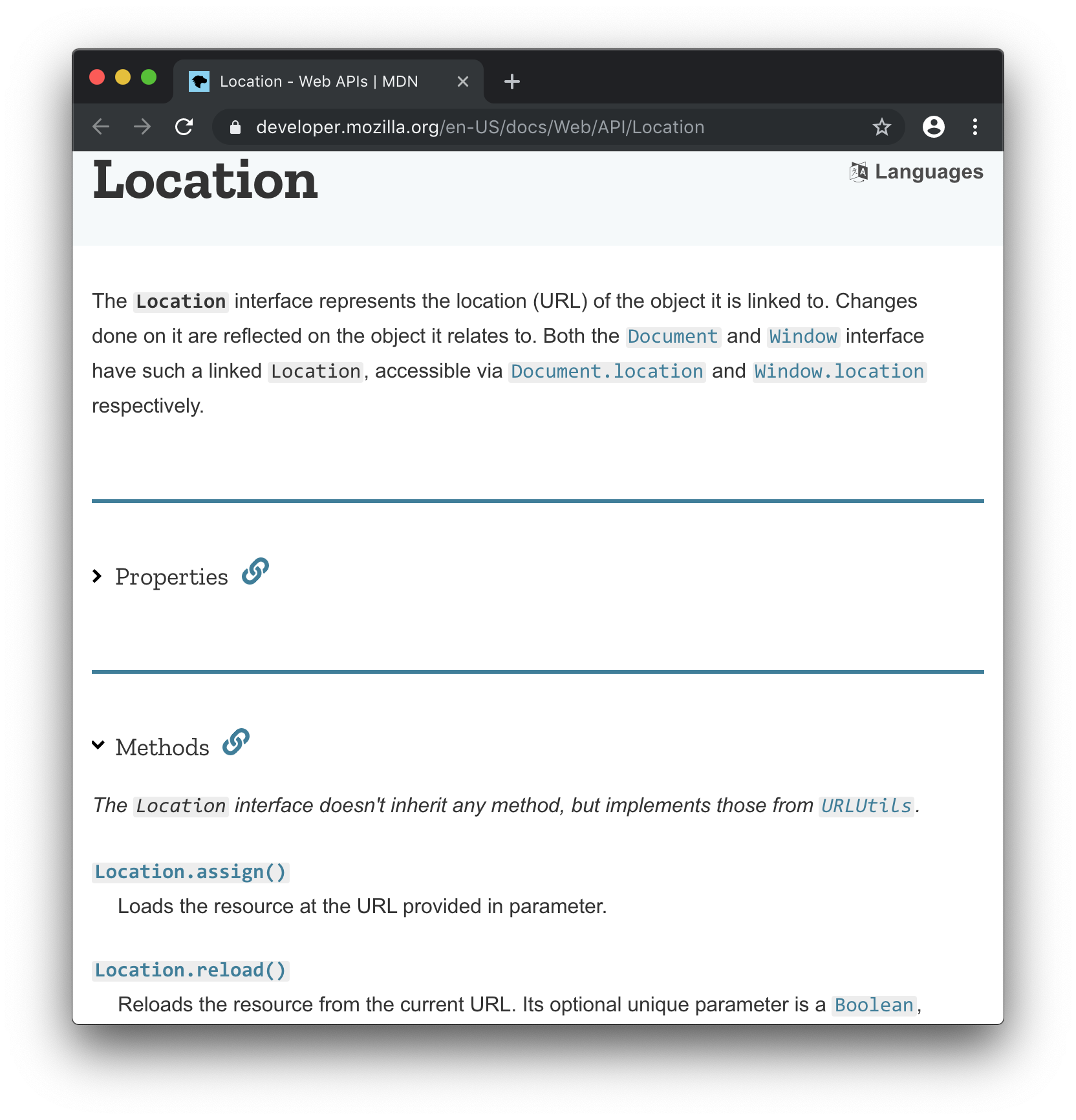

Each link brings you to the documentation of a single Javascript class, which has a short description for the class and a list of properties and methods, each with their own description:

This data is only semi-structured: as it is hand-written, not every page follows exactly the same layout, some pages will have missing sections while others might have extra detail that the documentation author thought would help clarify usage of these APIs. Nevertheless, this semi-structured information can still be very useful: perhaps you want to integrate it into your editor to automatically provide some hints and tips while you are working on your own Javascript code.

How can we convert this semi-structured human-readable MDN documentation website into something structured and machine-readable? Our approach will be as follows:

Scrape the main index page at https://developer.mozilla.org/en-US/docs/Web/API to find a list of URLs to all other pages we might be interested in

Loop through each individual URL and scrape the relevant summary documentation from that page: documentation for the interface, each method, each property, etc.

Aggregate all the scraped summary documentation and save it to a file as JSON, for use later.

To begin with, we can right-click and Inspect the top-level index containing the links to each individual page:

From here, we can see that the <h2 id="Interfaces"> header can be used to identify the section we care about, all of the <a> links are under the <div

class="index" ...> that's below it. We can thus select all those links via:

@ val doc = Jsoup.connect("https://developer.mozilla.org/en-US/docs/Web/API").get()

doc: nodes.Document = <!doctype html>

<html lang="en" dir="ltr" class="no-js">

<head prefix="og: http://ogp.me/ns#">

<meta charset="utf-8">

...

@ val links = doc.select("h2#Interfaces").nextAll.select("div.index a")

links: select.Elements = <a href="/en-US/docs/Web/API/ANGLE_instanced_arrays" title="The ANGLE_instanced_arrays extension is part of the WebGL API and allows to draw the same object, or groups of similar objects multiple times, if they share the same vertex data, primitive count and type."><code>ANGLE_instanced_arrays</code></a>

<a href="/en-US/docs/Web/API/AbortController" title="The AbortController interface represents a controller object that allows you to abort one or more DOM requests as and when desired."><code>AbortController</code></a>

<a href="/en-US/docs/Web/API/AbortSignal" title="The AbortSignal interface represents a signal object that allows you to communicate with a DOM request (such as a Fetch) and abort it if required via an AbortController object."><code>AbortSignal</code></a>

<a href="/en-US/docs/Web/API/AbsoluteOrientationSensor" title="The AbsoluteOrientationSensor interface of the Sensor APIs describes the device's physical orientation in relation to the Earth's reference coordinate system."><code>AbsoluteOrientationSensor</code></a>

...

Note that while there appears to be a <div id="collapse-1"> in the browser console when we inspect the element, that div does not appear in the HTML we receive via Jsoup.connect. This is because that div is added via Javascript, which Jsoup does not support. In general, pages using Javascript may appear slightly different in Jsoup.connect from what you see in the browser, and you may need to dig around in the REPL with .select to find what you want.

From these elements, we can then extract the high-level information we want from each link: the URL, the mouse-over title, and the name of the page:

@ val linkData = links.asScala.map(link => (link.attr("href"), link.attr("title"), link.text))

linkData: collection.mutable.Buffer[(String, String, String)] = ArrayBuffer(

(

"/en-US/docs/Web/API/ANGLE_instanced_arrays",

"The ANGLE_instanced_arrays extension is part of the WebGL API and allows to draw the same object, or groups of similar objects multiple times, if they share the same vertex data, primitive count and type.",

"ANGLE_instanced_arrays"

),

(

"/en-US/docs/Web/API/AbortController",

"The AbortController interface represents a controller object that allows you to abort one or more DOM requests as and when desired.",

"AbortController"

),

(

"/en-US/docs/Web/API/AbortSignal",

"The AbortSignal interface represents a signal object that allows you to communicate with a DOM request (such as a Fetch) and abort it if required via an AbortController object.",

"AbortSignal"

),

...

From there, we can look into scraping data off of each individual page.

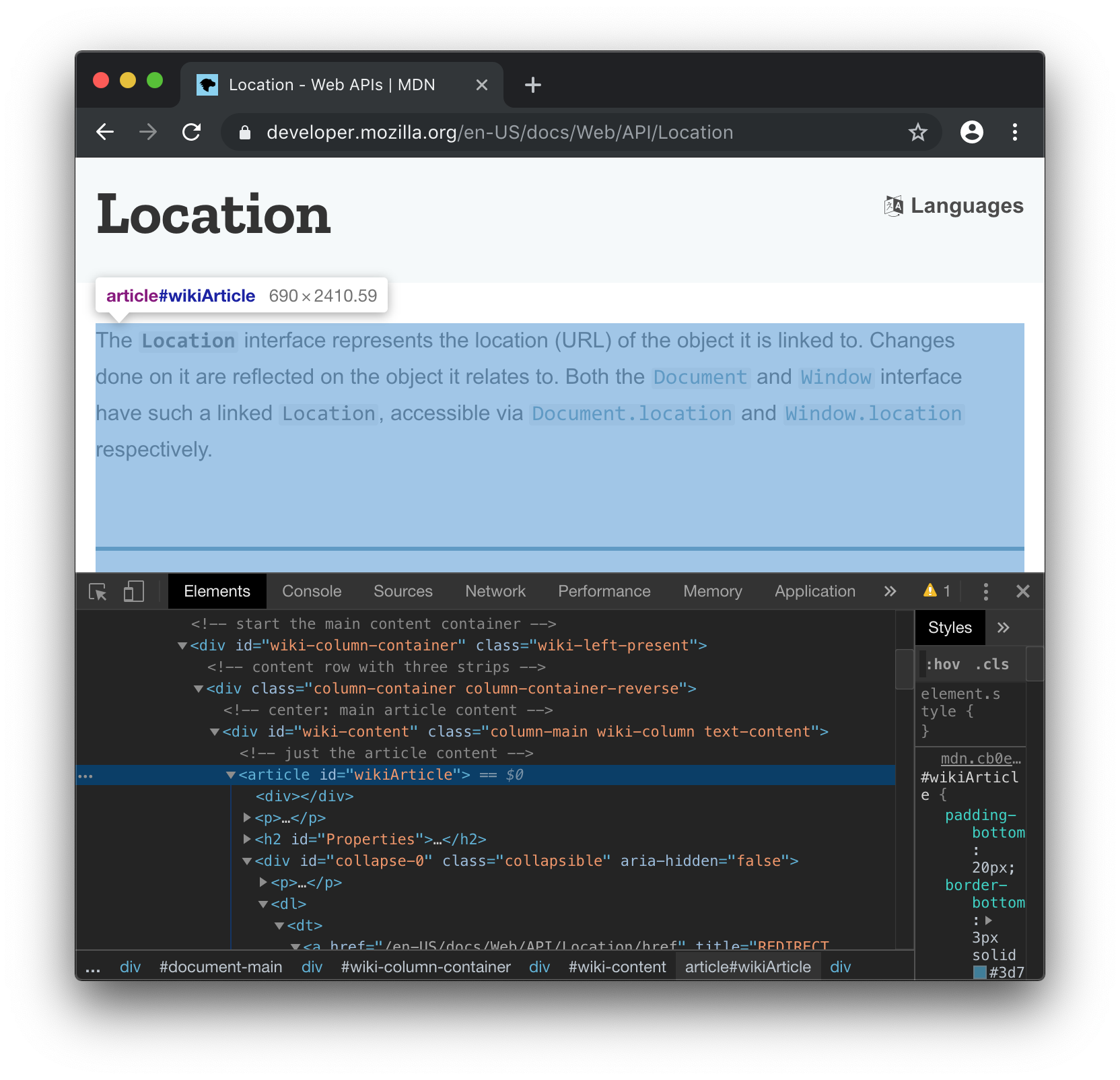

Let's go back to the Location page we saw earlier:

If we inspect the HTML of the page, we can see that the main page contents is within an <article id="wikiArticle"> tag:

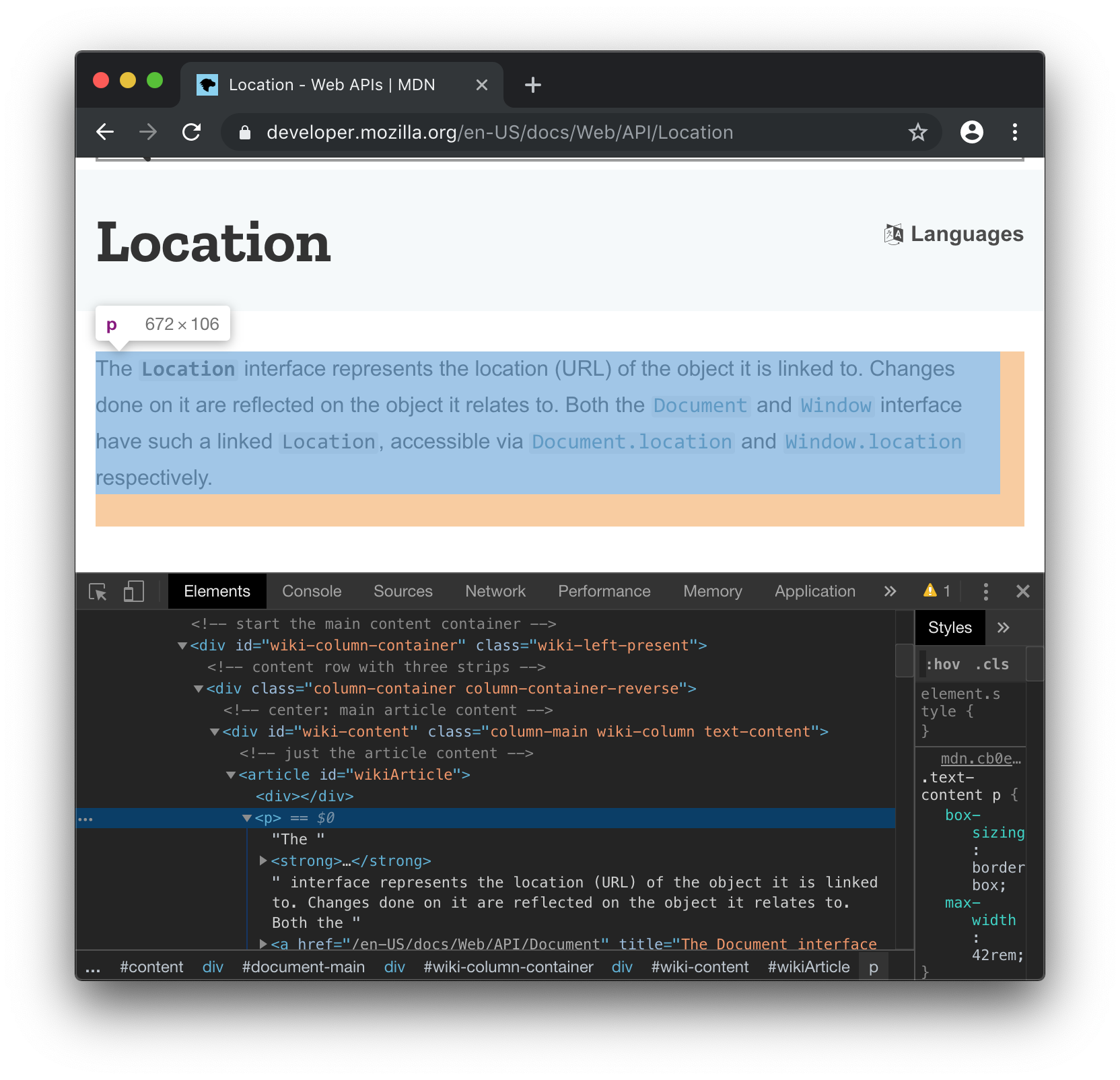

The summary text for this Location page is simply the first <p> paragraph tag within:

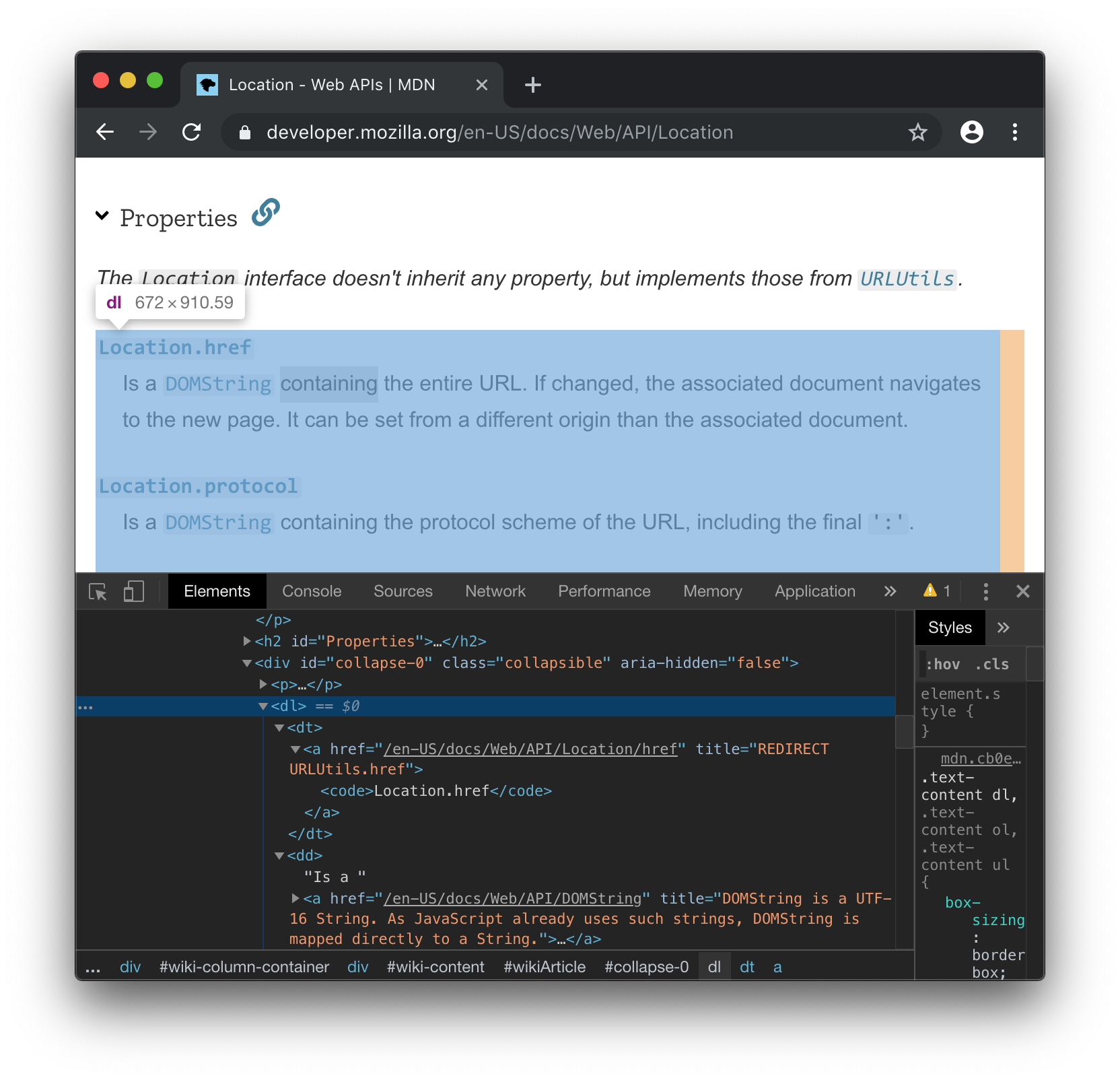

And the name and text for each property and method are within a <dl> definition list, as pairs of <dt> and <dd> tags:

@ val doc = Jsoup.connect("https://developer.mozilla.org/en-US/docs/Web/API/Location").get()

doc: nodes.Document = <!doctype html>

<html lang="en" dir="ltr" class="no-js">

<head prefix="og: http://ogp.me/ns#">

...

We can thus use the article#wikiArticle > p selector to find all relevant paragraphs (Note we only want the direct children of #wikiArticle, hence the >) and .head to find the first paragraph:

@ doc.select("article#wikiArticle > p").asScala.head.text

res39: String = "The Location interface represents the location (URL) of the object it is linked to. Changes done on it are reflected on the object it relates to. Both the Document and Window interface have such a linked Location, accessible via Document.location and Window.location respectively."

And the article#wikiArticle dl dt selector to find all the tags containing the name of a property or method:

@ val nameElements = doc.select("article#wikiArticle dl dt")

nameElements: select.Elements = <dt>

<a href="/en-US/docs/Web/API/Location/href" title="REDIRECT URLUtils.href"><code>Location.href</code></a>

</dt>

<dt>

<a href="/en-US/docs/Web/API/Location/protocol" title="REDIRECT URLUtils.protocol"><code>Location.protocol</code></a>

</dt>

<dt>

...

And the .nextElementSibling of each to find the tag containing the description:

@ val nameDescPairs = nameElements.asScala.map(element => (element, element.nextElementSibling))

nameDescPairs: collection.mutable.Buffer[(nodes.Element, nodes.Element)] = ArrayBuffer(

(

<dt>

<a href="/en-US/docs/Web/API/Location/href" title="REDIRECT URLUtils.href"><code>Location.href</code></a>

</dt>,

<dd>

Is a

<a href="/en-US/docs/Web/API/DOMString" title="DOMString is a UTF-16 String. As JavaScript already uses such strings, DOMString is mapped directly to a String."><code>DOMString</code></a> containing the entire URL. If changed, the associated document navigates to the new page. It can be set from a different origin than the associated document.

</dd>

),

...

And finally calling .text to get the raw text of each:

@ val textPairs = nameDescPairs.map{case (k, v) => (k.text, v.text)}

textPairs: collection.mutable.Buffer[(String, String)] = ArrayBuffer(

(

"Location.href",

"Is a DOMString containing the entire URL. If changed, the associated document navigates to the new page. It can be set from a different origin than the associated document."

),

(

"Location.protocol",

"Is a DOMString containing the protocol scheme of the URL, including the final ':'."

),

(

"Location.host",

"Is a DOMString containing the host, that is the hostname, a ':', and the port of the URL."

),

...

We now have snippets of code that let us scrape the index of Web APIs from MDN, giving us a list of every API documented:

@ val doc = Jsoup.connect("https://developer.mozilla.org/en-US/docs/Web/API").get()

@ val links = doc.select("h2#Interfaces").nextAll.select("div.index a")

@ val linkData = links.asScala.map(link => (link.attr("href"), link.attr("title"), link.text))

linkData: collection.mutable.Buffer[(String, String, String)] = ArrayBuffer(

(

"/en-US/docs/Web/API/ANGLE_instanced_arrays",

"The ANGLE_instanced_arrays extension is part of the WebGL API and allows to draw the same object, or groups of similar objects multiple times, if they share the same vertex data, primitive count and type.",

"ANGLE_instanced_arrays"

),

(

"/en-US/docs/Web/API/AbortController",

"The AbortController interface represents a controller object that allows you to abort one or more DOM requests as and when desired.",

"AbortController"

),

...

And also to scrape the page of each individual API, fetching summary documentation for both that API and each individual property and method:

@ val doc = Jsoup.connect("https://developer.mozilla.org/en-US/docs/Web/API/Location").get()

@ doc.select("article#wikiArticle > p").asScala.head.text

res39: String = "The Location interface represents the location (URL) of the object it is linked to. Changes done on it are reflected on the object it relates to. Both the Document and Window interface have such a linked Location, accessible via Document.location and Window.location respectively."

@ val nameElements = doc.select("article#wikiArticle dl dt")

@ val nameDescPairs = nameElements.asScala.map(element => (element, element.nextElementSibling))

@ val textPairs = nameDescPairs.map{case (k, v) => (k.text, v.text)}

textPairs: collection.mutable.Buffer[(String, String)] = ArrayBuffer(

(

"Location.href",

"Is a DOMString containing the entire URL. If changed, the associated document navigates to the new page. It can be set from a different origin than the associated document."

),

(

"Location.protocol",

"Is a DOMString containing the protocol scheme of the URL, including the final ':'."

),

...

We can thus combine both of these together into a single piece of code that will loop over the pages linked from the index, and then fetch the summary paragraph and method/property details for each documented API:

@ {

import $ivy.`org.jsoup:jsoup:1.12.1`, org.jsoup._

import collection.JavaConverters._

val indexDoc = Jsoup.connect("https://developer.mozilla.org/en-US/docs/Web/API").get()

val links = indexDoc.select("h2#Interfaces").nextAll.select("div.index a")

val linkData = links.asScala.map(link => (link.attr("href"), link.attr("title"), link.text))

val articles = for((url, tooltip, name) <- linkData) yield {

println("Scraping " + name)

val doc = Jsoup.connect("https://developer.mozilla.org" + url).get()

val summary = doc.select("article#wikiArticle > p").asScala.headOption.fold("")(_.text)

val methodsAndProperties = doc

.select("article#wikiArticle dl dt")

.asScala

.map(elem => (elem.text, elem.nextElementSibling match {case null => "" case x => x.text}))

(url, tooltip, name, summary, methodsAndProperties)

}

}

Note that I added a bit of error handling in here: rather than fetching the summary text via .head.text, I use .headOption.fold("")(_.text) to account for the possibility that there is no summary paragraph. Similarly, I check .nextElementSibling to see if it is null before calling .text to fetch its contents. Other than that, it is essentially the same as the snippets we saw earlier.

This should take a few minutes to run, as it has to fetch every page individually to parse and extract the data we want. After it's done, we should see the following output:

articles: collection.mutable.Buffer[(String, String, String, String, collection.mutable.Buffer[(String, String)])] = ArrayBuffer(

(

"/en-US/docs/Web/API/ANGLE_instanced_arrays",

"The ANGLE_instanced_arrays extension is part of the WebGL API and allows to draw the same object, or groups of similar objects multiple times, if they share the same vertex data, primitive count and type.",

"ANGLE_instanced_arrays",

"The ANGLE_instanced_arrays extension is part of the WebGL API and allows to draw the same object, or groups of similar objects multiple times, if they share the same vertex data, primitive count and type.",

ArrayBuffer(

(

"ext.VERTEX_ATTRIB_ARRAY_DIVISOR_ANGLE",

"Returns a GLint describing the frequency divisor used for instanced rendering when used in the gl.getVertexAttrib() as the pname parameter."

),

(

"ext.drawArraysInstancedANGLE()",

"Behaves identically to gl.drawArrays() except that multiple instances of the range of elements are executed, and the instance advances for each iteration."

),

(

...

articles contains the first-paragraph summary of every documentation. We can see how many articles we have scraped in total:

@ articles.length

res60: Int = 917

As well as how many member and property documentation snippets we have fetched.

@ articles.map(_._5.length).sum

res61: Int = 16583

Lastly, if we need to use this information elsewhere, it is easy to dump to a JSON file that can be accessed from some other process:

@ os.write.over(os.pwd / "docs.json", upickle.default.write(articles, indent = 4))

@ os.read(os.pwd / "docs.json")

res65: String = """[

[

"/en-US/docs/Web/API/ANGLE_instanced_arrays",

"The ANGLE_instanced_arrays extension is part of the WebGL API and allows to draw the same object, or groups of similar objects multiple times, if they share the same vertex data, primitive count and type.",

"ANGLE_instanced_arrays",

"The ANGLE_instanced_arrays extension is part of the WebGL API and allows to draw the same object, or groups of similar objects multiple times, if they share the same vertex data, primitive count and type.",

[

[

"ext.VERTEX_ATTRIB_ARRAY_DIVISOR_ANGLE",

"Returns a GLint describing the frequency divisor used for instanced rendering when used in the gl.getVertexAttrib() as the pname parameter."

],

[

"ext.drawArraysInstancedANGLE()",

"Behaves identically to gl.drawArrays() except that multiple instances of the range of elements are executed, and the instance advances for each iteration."

],

[

"ext.drawElementsInstancedANGLE()",

...

In this tutorial, we have walked through the basics of using the Scala programming language and Jsoup HTML parser to scrape semi-structured data off of human-readable HTML pages: specifically taking the well-known MDN Web API Documentation, and extracting summary documentation for every interface, method and property documented within it. We did so interactively in the REPL, and were able to see immediately what our code did to extract information from the semi-structured human-readable HTML. For re-usability, you may want to place the code in a Scala Script, or for more sophisticated scrapers use a proper build tool like Mill.

This post only covers the basics of web scraping: for websites that need user accounts and logic, you may need to use Requests-Scala to go through the HTTP signup flow and maintain a user session. For websites that require Javascript to function, you may need a more fully-featured browser automation tool like Selenium. Nevertheless, this should be enough to get you started with the basic concepts of fetching, navigating and scraping HTML websites using Scala.

About the Author: Haoyi is a software engineer, and the author of many open-source Scala tools such as the Ammonite REPL and the Mill Build Tool. If you enjoyed the contents on this blog, you may also enjoy Haoyi's book Hands-on Scala Programming